TCP #94: Fast CI/CD Won't Fix Your Slow Deployments

It's not your tooling. It's not your pipeline.


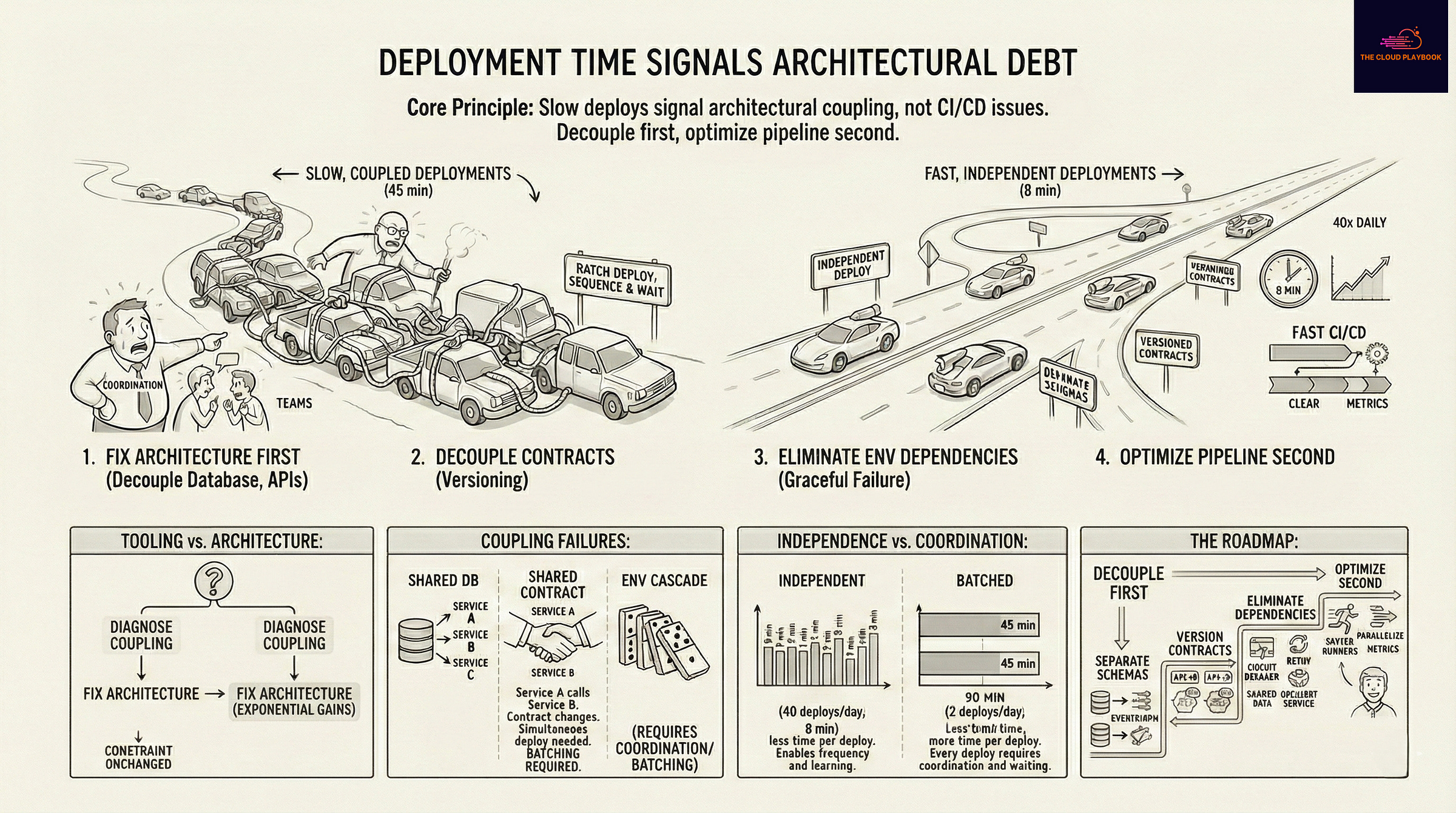

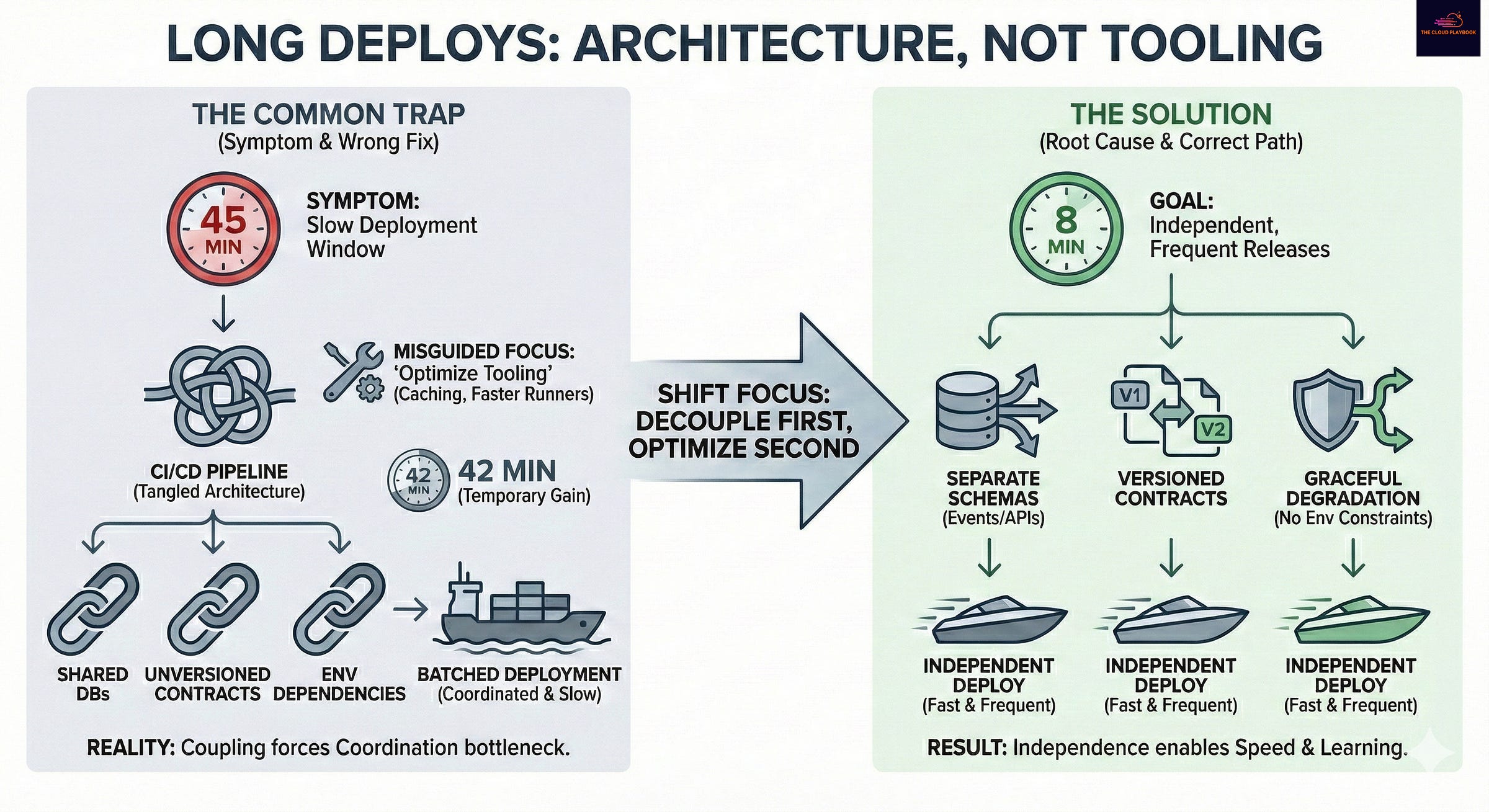

Your deployment window hits 45 minutes. Engineering complains. Product asks why shipping takes so long. Someone opens a ticket to optimize the CI/CD pipeline.

This looks like a tooling problem.

It is not.

Optimize the Pipeline

Teams audit their build system. They add layer caching to Docker builds. They parallelize test suites. They upgrade to faster runners.

The deploy time drops to 38 minutes. Then 42. Then back to 47 as the codebase grows.

Leadership approves the platform team's budget. The platform team instruments the pipeline. They generate graphs showing which steps take the longest. Test execution accounts for 18 minutes, image builds take 12, deployment rollout takes 15, and health checks take 5.

They optimize each stage. Deployments now take 46 minutes.

The underlying problem compounds.

Architectural Coupling at the Deployment Boundary

The 45-minute deploy is not a CI/CD problem. It is an architectural coupling problem that manifests at the deployment boundary.

Services share deployment fate. Service A does not deploy until Service B deploys. Service B does not deploy until the shared library updates. The shared library does not update until someone coordinates the rollout across 8 consuming services.

As a result, no one deploys independently.

Every change requires sequencing. Every sequence requires coordination. Every coordination adds time.

The coupling creates three failure modes.

First, shared databases. Multiple services write to the same schema. A migration in one service breaks queries in another. Teams coordinate deploys to avoid breaking production. The coordination forces a deployment window. The window grows as more services join the dependency graph.

Second, shared contracts without versioning. Service A calls Service B. The contract changes. Both services must deploy simultaneously, or the integration breaks. Teams bundle the changes. The bundle increases the batch size. Batch size determines cycle time.

Third, environment-level dependencies. Service A requires Service B to be healthy before it starts. Service B requires Service C. The cascade means deployments happen serially. Serial execution guarantees long windows.

Teams see the 45-minute symptom. They conclude the tooling is slow. They measure stage duration and optimize execution.

The diagnosis is wrong.

The tooling executes the deployment graph. The graph topology determines the critical path. Optimizing execution without changing topology yields linear gains on an exponential problem.

A service coupled to 4 other services requires coordinating 5 deployments. Coordinating 5 deployments requires agreeing on timing, validating compatibility, sequencing rollout, and monitoring health across the boundary.

This coordination is what consumes the 45 minutes.

Faster CI/CD runs the same coordination in 42 minutes. The coordination still exists. The coupling still exists. The constraint is unchanged.
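The critical-path argument can be sketched in a few lines. This is a minimal illustration under assumed inputs. The service names, the serial chain, and the per-service minutes are hypothetical, not measurements from the scenario above. The code treats the deployment graph as a DAG and computes its longest chain. That chain bounds wall-clock time no matter how many runners you add.

```python
from functools import lru_cache

# Hypothetical serial chain (assumed, for illustration):
# shared-lib -> svc-c -> svc-b -> svc-a. Durations are in minutes.
DURATIONS = {"shared-lib": 10, "svc-c": 8, "svc-b": 9, "svc-a": 8}
DEPENDS_ON = {
    "shared-lib": [],
    "svc-c": ["shared-lib"],
    "svc-b": ["svc-c"],
    "svc-a": ["svc-b"],
}


def critical_path_minutes(durations, depends_on):
    """Longest dependency chain: the minimum wall-clock time to deploy
    everything, even with unlimited parallel runners."""

    @lru_cache(maxsize=None)
    def finish(service):
        # A service can start only after its slowest prerequisite finishes.
        start = max((finish(dep) for dep in depends_on[service]), default=0)
        return start + durations[service]

    return max(finish(service) for service in durations)


coupled = critical_path_minutes(DURATIONS, DEPENDS_ON)
# Faster tooling: every stage gets 2 minutes quicker, same graph.
faster_tooling = critical_path_minutes(
    {s: d - 2 for s, d in DURATIONS.items()}, DEPENDS_ON)
# Decoupled: same stage times, no edges between services.
decoupled = critical_path_minutes(DURATIONS, {s: [] for s in DURATIONS})
print(coupled, faster_tooling, decoupled)  # 35 27 10
```

Shaving time off every stage moves the chain from 35 to 27 minutes. Removing the edges collapses it to the single slowest deploy, 10 minutes.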


Coupling or Tooling?

Ask this question of every service: can it deploy without coordinating with another team?

If the answer is no for every service, you have coupling. If it is yes for some services and no for others, you have partial coupling. If it is yes for all services, you have a tooling problem.
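The diagnostic can be expressed as a small function. This is a sketch that assumes you have built a hypothetical map from your own deploy records. The map sends each service to the set of teams it must coordinate with before deploying, and an empty set means the service deploys independently.

```python
def diagnose(coordination: dict[str, set[str]]) -> str:
    """Classify a system from a hypothetical map of
    service -> teams it must coordinate with before deploying."""
    if not coordination:
        raise ValueError("need at least one service")
    # Count services that deploy with no cross-team coordination.
    independent = sum(1 for teams in coordination.values() if not teams)
    if independent == 0:
        return "coupling"
    if independent < len(coordination):
        return "partial coupling"
    return "tooling problem"


print(diagnose({"orders": {"payments-team"}, "payments": {"orders-team"}}))  # coupling
print(diagnose({"orders": set(), "payments": {"ledger-team"}}))  # partial coupling
print(diagnose({"orders": set(), "payments": set()}))  # tooling problem
```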

Most teams answer no. They have convinced themselves that the coupling is necessary. The database must be shared for consistency. The contract must be synchronized for correctness. The environment must be coordinated for stability.

Wrong on all three counts.

Count how many services deploy in your 45-minute window. If more than one does, you are batching. Batching is coordination. Coordination is coupling.
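The batching check can be run against a deploy log. This is a minimal sketch that assumes the log is a list of hypothetical `(window_id, service)` pairs exported from your CD system. Any window that shipped more than one service is a batch.

```python
from collections import defaultdict


def batched_windows(deploy_log):
    """Return windows that shipped more than one service (i.e. batches),
    mapped to the sorted list of services in each."""
    services_per_window = defaultdict(set)
    for window_id, service in deploy_log:
        services_per_window[window_id].add(service)
    return {w: sorted(s) for w, s in services_per_window.items() if len(s) > 1}


# Hypothetical log: one batched morning window, one single-service deploy.
log = [("mon-am", "orders"), ("mon-am", "payments"),
       ("mon-am", "search"), ("mon-pm", "orders")]
print(batched_windows(log))  # {'mon-am': ['orders', 'payments', 'search']}
```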

High-performing teams deploy one service at a time. Each deploy takes 8 minutes. They deploy 40 times per day. No single deploy exceeds 10 minutes.

Low-performing teams deploy 6 services together. Each combined deploy takes 45 minutes. They deploy twice per day.

The high-performing team spends more total time deploying. They spend less time per deploy.
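The arithmetic behind this comparison uses only the numbers from the two paragraphs above. `DeployCadence` is a hypothetical helper. The one derived figure is service releases per day (deploys × services per deploy). It shows the independent team ships 40 service changes a day against 12 for the batched team.

```python
from dataclasses import dataclass


@dataclass
class DeployCadence:
    deploys_per_day: int
    services_per_deploy: int
    minutes_per_deploy: int

    @property
    def total_minutes(self) -> int:
        # Total time spent deploying per day.
        return self.deploys_per_day * self.minutes_per_deploy

    @property
    def service_releases(self) -> int:
        # Individual service changes shipped per day.
        return self.deploys_per_day * self.services_per_deploy


high = DeployCadence(deploys_per_day=40, services_per_deploy=1, minutes_per_deploy=8)
low = DeployCadence(deploys_per_day=2, services_per_deploy=6, minutes_per_deploy=45)
print(high.total_minutes, high.service_releases)  # 320 40
print(low.total_minutes, low.service_releases)    # 90 12
```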

The difference is independence. Independence enables frequency. Frequency enables learning. Learning enables speed.

Decouple the Architecture First

Fix the architecture before you fix the pipeline.

Start with the database. Separate schemas. Give each service its own tables. If services need to share data, use events or APIs. Events create temporal decoupling. APIs create interface contracts. Both eliminate deployment coordination.
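Here is a minimal sketch of sharing data through events instead of a shared schema. It assumes a hypothetical `OrdersService` that owns order data and a hypothetical `BillingService` that keeps its own copy of the fields it needs. The in-memory `EventBus` is only a stand-in. It delivers synchronously, so the temporal decoupling described above would come from a real message broker that buffers events while a consumer is down or mid-deploy.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class OrderPlaced:
    order_id: str
    customer_id: str
    total_cents: int


class EventBus:
    """In-memory, synchronous stand-in for a real message broker."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, event_type, handler):
        self._subscribers[event_type].append(handler)

    def publish(self, event):
        for handler in self._subscribers[type(event)]:
            handler(event)


class OrdersService:
    """Owns order data; no other service reads this storage directly."""

    def __init__(self, bus):
        self._orders = {}  # stands in for the orders service's own schema
        self._bus = bus

    def place_order(self, order_id, customer_id, total_cents):
        self._orders[order_id] = (customer_id, total_cents)
        self._bus.publish(OrderPlaced(order_id, customer_id, total_cents))


class BillingService:
    """Builds its own read model from events instead of joining orders tables."""

    def __init__(self, bus):
        self.balances = defaultdict(int)
        bus.subscribe(OrderPlaced, self._on_order_placed)

    def _on_order_placed(self, event):
        self.balances[event.customer_id] += event.total_cents


bus = EventBus()
billing = BillingService(bus)
orders = OrdersService(bus)
orders.place_order("o-1", "c-42", 1999)
print(billing.balances["c-42"])  # 1999
```

Either service can change its internal tables without a joint migration. Only the event shape is shared, and that shape can be versioned like any other contract.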

Migration is a one-time cost. Coupling is a recurring one. You recover the investment within a few quarters.

Next, version your contracts. Service A calls Service B using version 2 of the API. Service B deploys version 3. Service A continues using version 2. Service B supports both versions temporarily. Service A migrates when ready.
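Here is a minimal sketch of Service B serving two contract versions side by side. The data, field names, and the change from a single `name` field (v2) to split name fields (v3) are all hypothetical. The point is that v2 callers keep working while v3 ships, and the v2 handler can be deleted once no caller uses it.

```python
from typing import Callable

# Hypothetical customer record held by Service B.
CUSTOMERS = {"c-42": {"first_name": "Ada", "last_name": "Lovelace"}}


def get_customer_v2(customer_id: str) -> dict:
    # Old contract: a single "name" field, kept until callers migrate.
    c = CUSTOMERS[customer_id]
    return {"id": customer_id, "name": f"{c['first_name']} {c['last_name']}"}


def get_customer_v3(customer_id: str) -> dict:
    # New contract: structured name fields.
    c = CUSTOMERS[customer_id]
    return {"id": customer_id, "first_name": c["first_name"],
            "last_name": c["last_name"]}


HANDLERS: dict[str, Callable[[str], dict]] = {
    "v2": get_customer_v2,
    "v3": get_customer_v3,
}


def handle(version: str, customer_id: str) -> dict:
    """Dispatch on the version the caller requested (e.g. a /v2/ path prefix)."""
    try:
        handler = HANDLERS[version]
    except KeyError:
        raise ValueError(f"unsupported API version: {version}") from None
    return handler(customer_id)


print(handle("v2", "c-42"))  # {'id': 'c-42', 'name': 'Ada Lovelace'}
```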

No coordination required.

Contract versioning adds complexity. The complexity is local to each service. Deployment coordination adds complexity across the organization. Organizational complexity scales worse than technical complexity.

Finally, eliminate environment-level dependencies. Service A should start even if Service B is down. Service A degrades gracefully. It retries. It uses a circuit breaker. It serves cached data.
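Here is a minimal sketch of Service A reading from Service B with bounded retries, a circuit breaker, and a cached fallback. `CircuitBreaker` and `fetch_with_fallback` are hypothetical names written for illustration, not a specific library's API. The retries here fire immediately, where production code would add backoff. `ConnectionError` stands in for whatever your client raises when B is unreachable, and the clock is injectable so the behavior can be tested.

```python
import time
from typing import Callable, Optional


class CircuitBreaker:
    """Opens after `max_failures` consecutive failures, then blocks calls
    until `reset_after` seconds pass and allows a trial call (half-open)."""

    def __init__(self, max_failures: int = 3, reset_after: float = 30.0,
                 clock: Callable[[], float] = time.monotonic):
        self.max_failures = max_failures
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None

    def allow(self) -> bool:
        if self.opened_at is None:
            return True
        # Half-open once the cool-down has elapsed.
        return self.clock() - self.opened_at >= self.reset_after

    def record_success(self) -> None:
        self.failures = 0
        self.opened_at = None

    def record_failure(self) -> None:
        self.failures += 1
        if self.failures >= self.max_failures:
            self.opened_at = self.clock()


def fetch_with_fallback(fetch: Callable[[], str], breaker: CircuitBreaker,
                        cache: dict, key: str, retries: int = 2) -> Optional[str]:
    """Call Service B through the breaker; serve cached data if it fails."""
    if breaker.allow():
        for _ in range(retries):
            try:
                value = fetch()
            except ConnectionError:
                breaker.record_failure()
                if not breaker.allow():
                    break  # circuit just opened: stop calling Service B
                continue  # retry immediately (real code would back off)
            breaker.record_success()
            cache[key] = value
            return value
    # Degraded mode: last known value, or None if nothing is cached.
    return cache.get(key)
```

Service A keeps starting and serving requests while B is down. It answers with stale data instead of blocking on B's deploy.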

Degradation is preferable to coupling.

Build failure handling into every service. That code is local. The alternative is deployment coupling, and deployment coupling is global.

Once services are decoupled, optimize the pipeline. Faster builds matter when each service deploys independently 10 times per day. Faster builds do not matter when 6 services deploy together twice per day.

The constraint is not the build. The constraint is the coordination.

Deployment Time Signals Architectural Debt

Deployment time is a lagging indicator of architectural coupling. Teams see the lag and optimize the tooling.

The tooling is downstream of the architecture. Optimizing downstream of the constraint yields diminishing returns.

The pattern most teams miss is this: when deploys take 45 minutes, the problem is not in the CI/CD system. It is in the dependency graph, and the dependency graph is an architectural choice.

Architectural choices require architectural solutions.

Treat slow deploys as a signal. The signal indicates coupling.

Decouple first. Optimize second.

Whenever you’re ready, there are 2 ways I can help you:

Free guides and helpful resources: https://thecloudplaybook.gumroad.com/

Get certified as an AWS AI Practitioner in 2026. Sign up today to elevate your cloud skills.

That’s it for today!

Did you enjoy this newsletter issue?

Share with your friends, colleagues, and your favorite social media platform.

Until next week — Amrut

Get in touch

You can find me on LinkedIn or X.

If you would like to request a topic to read, please feel free to contact me directly via LinkedIn or X.