TCP #73: How One Billing Audit Uncovered $34,164 in Annual Private Link Waste (and the systematic approach to eliminate it)

Private Link Service Pricing Optimization: The Complete Cost Control Guide


Last month, I received a cloud bill that made me question everything I thought I knew about Private Link pricing.

Our supposedly "optimized" infrastructure was bleeding $2,847 monthly on endpoint services alone.

What started as a routine cost review turned into a comprehensive audit that revealed systematic inefficiencies across our entire Private Link deployment.

The deeper I dug, the more I realized that Private Link's pricing model punishes the unprepared.

Unlike other AWS services where costs scale predictably with usage, Private Link has hidden multipliers that can turn a $100 monthly service into a $1,000 surprise.

In today’s newsletter, I break down the complete optimization playbook I developed, covering everything from basic cost hygiene to advanced architectural patterns that can reduce your Private Link spend by 60% or more.

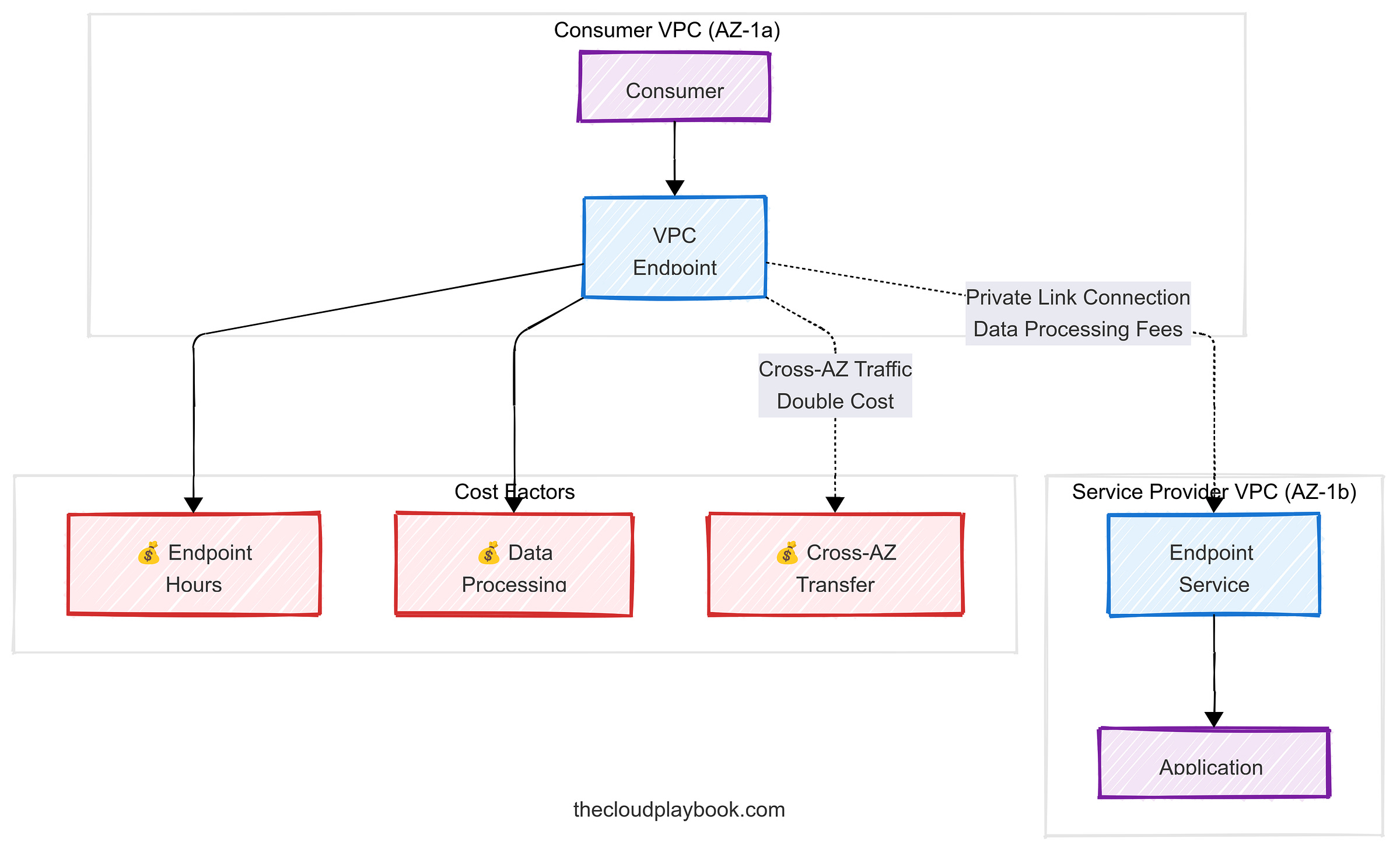

The Three-Layer Cost Structure Nobody Explains

Most teams focus solely on endpoint hours when evaluating the costs of Private Link. This narrow view misses two critical cost centers that often dwarf the base pricing:

Layer 1: Endpoint Hours (The Visible Cost)

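Every Private Link interface endpoint is billed $0.01 per hour for each Availability Zone it is deployed in (us-east-1 pricing), regardless of usage. That works out to $7.30 per AZ per month (730 hours), or about $21.90 for an endpoint spanning three AZs: manageable for a few services, devastating when you're running 50+ endpoints across multiple environments.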

Layer 2: Data Processing (The Usage Multiplier)

The $0.01 per GB processing fee (the first pricing tier; the rate only steps down at petabyte scale) applies to all data flowing through your endpoints. High-throughput applications can generate processing costs 10x higher than the base endpoint fees. I've seen API gateways rack up $800 monthly in processing charges alone.

Layer 3: Cross-AZ Transfer (The Hidden Killer)

When your Private Link endpoint resides in a different Availability Zone than your consumer, AWS charges standard cross-AZ transfer fees on top of processing costs. This double taxation can add 50-100% to your total Private Link bill.

Understanding these layers is crucial because optimization strategies vary dramatically based on which layer dominates your costs.
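To see which layer dominates your own bill, it helps to put numbers to all three. The sketch below is a minimal cost model, assuming us-east-1 list prices ($0.01 per endpoint-hour per AZ, $0.01 per GB processed) and an assumed $0.02 per GB for cross-AZ traffic ($0.01 charged on each side). `EndpointUsage` and `monthly_cost` are illustrative names, not part of any AWS SDK.

```python
from dataclasses import dataclass

HOURS_PER_MONTH = 730  # the month length behind the $7.30/month figure


@dataclass
class EndpointUsage:
    azs: int             # AZs the interface endpoint has an ENI in
    gb_processed: float  # GB per month flowing through the endpoint
    cross_az_gb: float   # part of that traffic that crosses an AZ boundary


def monthly_cost(u: EndpointUsage,
                 hourly_rate_per_az: float = 0.01,  # us-east-1 list price
                 processing_per_gb: float = 0.01,   # first processing tier
                 cross_az_per_gb: float = 0.02) -> dict:  # ASSUMED: $0.01 out + $0.01 in
    """Split one endpoint's monthly bill into the three layers."""
    layers = {
        "endpoint_hours": u.azs * HOURS_PER_MONTH * hourly_rate_per_az,
        "data_processing": u.gb_processed * processing_per_gb,
        "cross_az_transfer": u.cross_az_gb * cross_az_per_gb,
    }
    result = {name: round(cost, 2) for name, cost in layers.items()}
    result["total"] = round(sum(layers.values()), 2)
    # The layer with the largest cost is where optimization should start.
    result["dominant_layer"] = max(layers, key=layers.get)
    return result


# Example: 3 AZs, 5 TB processed per month, 1 TB of it crossing AZs.
print(monthly_cost(EndpointUsage(azs=3, gb_processed=5000, cross_az_gb=1000)))
```

Swap in the rates from your own region and bill; the `dominant_layer` field points at which of the sections below to tackle first.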

The Idle Endpoint Audit: Your First $1,000+ Savings

Start with the low-hanging fruit. Most organizations run 30-50% more endpoints than they actively need, primarily due to abandoned development environments and over-provisioned staging setups.

The Systematic Approach:

Begin by implementing comprehensive tagging across all endpoints. Use these mandatory tags: Environment, Owner, Project, LastUsed, and BusinessCritical. This metadata becomes essential for automated cleanup and cost allocation.
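A tag audit is easy to script. The sketch below assumes endpoint records shaped like the `VpcEndpoints` list returned by EC2's DescribeVpcEndpoints (each item carrying `VpcEndpointId` and a `Tags` list of `Key`/`Value` pairs), for example the output of boto3's `ec2.describe_vpc_endpoints()`. `missing_tags` is a hypothetical helper, and the sample IDs are made up.

```python
from __future__ import annotations

# Mandatory tags from the policy above.
REQUIRED_TAGS = {"Environment", "Owner", "Project", "LastUsed", "BusinessCritical"}


def missing_tags(endpoints: list[dict]) -> dict[str, set[str]]:
    """Map each endpoint ID to the mandatory tag keys it lacks (compliant ones are omitted)."""
    report = {}
    for ep in endpoints:
        present = {tag["Key"] for tag in ep.get("Tags", [])}
        missing = REQUIRED_TAGS - present
        if missing:
            report[ep["VpcEndpointId"]] = missing
    return report


# Example records in the DescribeVpcEndpoints response shape.
sample = [
    {"VpcEndpointId": "vpce-0aaa", "Tags": [{"Key": "Environment", "Value": "dev"},
                                            {"Key": "Owner", "Value": "team-a"}]},
    {"VpcEndpointId": "vpce-0bbb", "Tags": [{"Key": k, "Value": "x"} for k in REQUIRED_TAGS]},
]
print(missing_tags(sample))
```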

Next, establish usage baselines. The CloudWatch metrics AWS publishes for interface endpoints in the AWS/PrivateLinkEndpoints namespace, such as BytesProcessed and ActiveConnections, reveal actual endpoint utilization. Any endpoint showing zero traffic for 7+ days becomes a candidate for removal or scheduling.
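The 7-day rule translates into a small filter. This sketch assumes you have already pulled one daily `BytesProcessed` sum per endpoint into a dict (oldest day first). Because CloudWatch may return no datapoint at all for a day without traffic, fill those gaps with 0 before calling the hypothetical `idle_endpoints` helper.

```python
from __future__ import annotations


def idle_endpoints(daily_bytes: dict[str, list[float]], idle_days: int = 7) -> list[str]:
    """Endpoint IDs whose most recent `idle_days` daily byte totals are all zero.

    Series are ordered oldest to newest; endpoints with less history than
    `idle_days` are skipped as too new to judge.
    """
    idle = []
    for endpoint_id, series in daily_bytes.items():
        recent = series[-idle_days:]
        if len(recent) == idle_days and all(v == 0 for v in recent):
            idle.append(endpoint_id)
    return sorted(idle)


usage = {
    "vpce-0aaa": [5e9, 0, 0, 0, 0, 0, 0, 0],  # quiet for the last 7 days
    "vpce-0bbb": [0, 0, 0, 0, 0, 0, 1e6],     # traffic yesterday
    "vpce-0ccc": [0, 0, 0],                   # created 3 days ago
}
print(idle_endpoints(usage))  # ['vpce-0aaa']
```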

Scheduling Non-Production Endpoints:

Development and staging environments typically do not require 24/7 availability. Implementing automated scheduling can reduce non-production endpoint costs by 65-75%.

The most effective pattern uses Lambda functions triggered by EventBridge rules: create endpoints at 8 AM on weekdays and delete them at 6 PM. For complex environments with dependencies, use Step Functions to orchestrate the sequence.
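Here is a sketch of the arithmetic behind the 65-75% figure, plus EventBridge cron expressions for the weekday 8 AM to 6 PM window. Scheduled EventBridge rules evaluate cron in UTC (EventBridge Scheduler supports time zones if you need them), so the hours below are an assumption to adjust. `scheduled_savings` is an illustrative helper, not an AWS API.

```python
# EventBridge rule schedules. Fields: minutes hours day-of-month month day-of-week year.
# Rule cron expressions run in UTC, so shift the hours to match your team's timezone.
CREATE_SCHEDULE = "cron(0 8 ? * MON-FRI *)"
DELETE_SCHEDULE = "cron(0 18 ? * MON-FRI *)"

HOURS_PER_WEEK = 24 * 7


def scheduled_savings(start_hour: int, end_hour: int, days_per_week: int) -> float:
    """Fraction of always-on endpoint-hours avoided by running only in a daily window."""
    if not (0 <= start_hour < end_hour <= 24) or not (0 <= days_per_week <= 7):
        raise ValueError("window must satisfy 0 <= start < end <= 24 and 0-7 days")
    active_hours = (end_hour - start_hour) * days_per_week
    return 1 - active_hours / HOURS_PER_WEEK


print(f"{scheduled_savings(8, 18, 5):.1%}")  # prints 70.2%
```

One caveat worth designing for: a recreated interface endpoint gets a new endpoint ID and new endpoint-specific DNS names, so consumers should resolve the service through private DNS rather than hard-coding per-endpoint hostnames.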

Edge Case: Shared Development Environments

Teams spanning multiple time zones complicate scheduling strategies. Instead of complex scheduling, consider consolidating multiple dev environments behind a single, always-on endpoint with internal routing logic. This approach reduces costs while maintaining developer productivity.

Data Processing Optimization: Controlling the Usage Multiplier

Data processing fees scale linearly with throughput, making high-volume applications the primary targets for cost optimization. The key insight: not all traffic needs to flow through Private Link endpoints.

Traffic Pattern Analysis:

Start by categorizing your endpoint traffic into four buckets:

Critical secure communications (compliance-required Private Link usage)

High-volume routine operations (batch jobs, log streaming)

Interactive user traffic (API calls, web requests)

Background maintenance tasks (health checks, monitoring)

Each category requires different optimization strategies.

Selective Routing Strategies:

For applications generating mixed traffic types, implement intelligent routing at the application layer. Route compliance-sensitive traffic through Private Link while directing high-volume, less-sensitive operations through standard internet gateways or VPC peering connections.

This hybrid approach can reduce data processing costs by 40-60% while maintaining security requirements. The implementation requires careful traffic classification and routing logic, but the cost savings justify the complexity for high-throughput applications.
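A minimal sketch of the classification-plus-routing step, using the four traffic buckets above. The routing policy (which bucket goes where) and the path names are hypothetical placeholders; the one rule it hard-codes is that compliance-flagged traffic always stays on Private Link.

```python
from enum import Enum


class TrafficClass(Enum):
    CRITICAL_SECURE = "critical"     # compliance-required Private Link usage
    HIGH_VOLUME = "high_volume"      # batch jobs, log streaming
    INTERACTIVE = "interactive"      # API calls, web requests
    BACKGROUND = "background"        # health checks, monitoring


# Hypothetical policy: which network path each bucket uses by default.
DEFAULT_PATH = {
    TrafficClass.CRITICAL_SECURE: "privatelink",
    TrafficClass.INTERACTIVE: "privatelink",
    TrafficClass.HIGH_VOLUME: "vpc_peering",
    TrafficClass.BACKGROUND: "vpc_peering",
}


def choose_path(traffic_class: TrafficClass, compliance_required: bool) -> str:
    """Return the network path for a request; compliance flags always win."""
    if compliance_required or traffic_class is TrafficClass.CRITICAL_SECURE:
        return "privatelink"
    return DEFAULT_PATH[traffic_class]


print(choose_path(TrafficClass.HIGH_VOLUME, compliance_required=False))  # vpc_peering
print(choose_path(TrafficClass.HIGH_VOLUME, compliance_required=True))   # privatelink
```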

Compression and Batching:

Before implementing complex routing changes, evaluate opportunities for data compression. Applications that transfer JSON payloads or log data can often shrink them by 60-80%, directly reducing processing fees.

Batching strategies prove equally effective. Instead of processing individual API calls through Private Link, batch multiple operations into a single request. This approach works particularly well for database operations and file transfers.
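To check whether compression and batching pay off for your payloads, measure them. This sketch uses only the standard library's `gzip` and `json` to compare sending records one request at a time against sending gzip-compressed batches. It counts body bytes only (headers and TLS overhead are ignored), and the sample log records are made up.

```python
from __future__ import annotations

import gzip
import json


def payload_bytes(records: list[dict], batch_size: int) -> dict:
    """Body bytes for one-request-per-record vs gzip-compressed batches."""
    individual = sum(len(json.dumps(r).encode("utf-8")) for r in records)
    batched = 0
    for i in range(0, len(records), batch_size):
        body = json.dumps(records[i:i + batch_size]).encode("utf-8")
        batched += len(gzip.compress(body))
    return {
        "individual_bytes": individual,
        "batched_gzip_bytes": batched,
        "reduction": 1 - batched / individual,
    }


# Made-up, log-like records: repetitive keys compress well.
logs = [{"level": "INFO", "service": "billing", "msg": f"processed invoice {i}"}
        for i in range(1000)]
print(payload_bytes(logs, batch_size=100))
```

Larger batches give gzip more repetition to exploit, which is why the two techniques work well together.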

Cross-AZ Optimization: Eliminating Double Taxation

Cross-AZ data transfer represents the most overlooked cost optimization opportunity. Many teams inadvertently deploy Private Link endpoints in different availability zones (AZs) from their primary consumers, triggering both processing and transfer fees.

Deployment Topology Analysis:

Map your current endpoint and consumer topology. Use AWS Config or custom scripts to record which subnets (and therefore AZs) each endpoint occupies, and VPC Flow Logs to see which AZs its consumers call from. Focus on endpoints handling more than 10 GB monthly, since these generate the highest cross-AZ transfer costs.
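As one example of the custom-script route, the sketch below aggregates flow records and flags endpoints above the 10 GB threshold that carry cross-AZ traffic. The input format (endpoint ID, consumer AZ, endpoint ENI AZ, GB) is an assumption: you would build it yourself, for instance by joining VPC Flow Logs with each endpoint's ENI-to-subnet mapping. `cross_az_hotspots` is a hypothetical name.

```python
from __future__ import annotations

from collections import defaultdict


def cross_az_hotspots(flows, min_endpoint_gb: float = 10.0) -> dict[str, float]:
    """Return {endpoint_id: cross-AZ GB} for busy endpoints that carry cross-AZ traffic.

    flows: iterable of (endpoint_id, consumer_az, endpoint_eni_az, gb) tuples.
    """
    total = defaultdict(float)
    cross = defaultdict(float)
    for endpoint_id, consumer_az, eni_az, gb in flows:
        total[endpoint_id] += gb
        if consumer_az != eni_az:
            cross[endpoint_id] += gb
    return {ep: cross[ep] for ep in total
            if total[ep] > min_endpoint_gb and cross.get(ep, 0.0) > 0}


flows = [
    ("vpce-0aaa", "use1-az1", "use1-az1", 8.0),
    ("vpce-0aaa", "use1-az2", "use1-az1", 6.0),   # cross-AZ, endpoint over 10 GB
    ("vpce-0bbb", "use1-az2", "use1-az1", 4.0),   # cross-AZ, but below threshold
    ("vpce-0ccc", "use1-az1", "use1-az1", 50.0),  # busy, but all same-AZ
]
print(cross_az_hotspots(flows))  # {'vpce-0aaa': 6.0}
```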

Strategic AZ Placement:

For applications with consumers distributed across multiple AZs, the optimal strategy depends on traffic patterns. If 70%+ of traffic originates from a single AZ, deploy your endpoint there and accept cross-AZ costs for the minority traffic.

For evenly distributed traffic, consider deploying endpoints in each AZ, with application-level load balancing (or each consumer using the zonal DNS name for its own AZ) keeping traffic local. This approach eliminates cross-AZ transfer fees but increases endpoint-hour costs. The break-even point typically occurs at around 50 GB of monthly throughput per AZ.
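Both rules can be written down as a small placement helper. This sketch assumes $0.01 per AZ-hour and an assumed $0.02 per GB for cross-AZ traffic. It picks a single AZ when one AZ carries 70%+ of traffic, and otherwise adds an AZ only when its local traffic exceeds the break-even volume (monthly AZ-hour cost divided by the cross-AZ rate). Under these particular rates the break-even is 365 GB per AZ per month, well above 50 GB, so run the numbers with your own rates before adding endpoints. `placement_plan` is an illustrative name.

```python
from __future__ import annotations

HOURS_PER_MONTH = 730


def placement_plan(gb_by_az: dict[str, float],
                   hourly_rate_per_az: float = 0.01,
                   cross_az_per_gb: float = 0.02,   # ASSUMED cross-AZ rate
                   dominance: float = 0.70) -> dict:
    """Suggest which AZs should host the endpoint, given monthly GB per consumer AZ."""
    if not gb_by_az:
        raise ValueError("need traffic for at least one AZ")
    total = sum(gb_by_az.values())
    top_az, top_gb = max(gb_by_az.items(), key=lambda kv: kv[1])
    if total > 0 and top_gb / total >= dominance:
        # One AZ dominates: keep a single placement, accept cross-AZ for the rest.
        return {"strategy": "single_az", "azs": [top_az]}
    # Monthly cost of one more AZ divided by what each cross-AZ GB costs.
    break_even_gb = hourly_rate_per_az * HOURS_PER_MONTH / cross_az_per_gb
    azs = sorted(az for az, gb in gb_by_az.items()
                 if az == top_az or gb >= break_even_gb)
    return {"strategy": "multi_az", "azs": azs, "break_even_gb": round(break_even_gb, 1)}


print(placement_plan({"use1-az1": 800, "use1-az2": 120, "use1-az4": 80}))
print(placement_plan({"use1-az1": 400, "use1-az2": 380, "use1-az4": 100}))
```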

Multi-Region Considerations:

Cross-region Private Link deployments add another cost layer. Regional pricing variations can create arbitrage opportunities: us-east-1 is among the lowest-priced regions at $0.01/hour, while some Asia-Pacific regions charge 50% more.

For latency-tolerant applications, centralizing endpoints in low-cost regions can reduce total costs, but only if the savings exceed the inter-region data transfer charges the rerouted traffic now incurs. For user-facing applications, also weigh the added latency carefully.
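The trade-off is simple arithmetic: endpoint-hour savings against the inter-region transfer the rerouted traffic now pays. In this sketch every rate is a hypothetical input (plug in current prices for your regions), and it ignores any peering or Transit Gateway charges on the new path.

```python
HOURS_PER_MONTH = 730


def centralization_savings(local_hourly: float, central_hourly: float, azs: int,
                           monthly_gb: float, inter_region_per_gb: float) -> float:
    """Monthly $ saved by serving traffic from a cheaper central region (negative = loss).

    Counts only endpoint-hour savings against inter-region transfer; peering or
    Transit Gateway charges on the new path would come on top.
    """
    hour_savings = (local_hourly - central_hourly) * azs * HOURS_PER_MONTH
    transfer_cost = monthly_gb * inter_region_per_gb
    return hour_savings - transfer_cost


# Hypothetical rates: $0.015/h locally, $0.01/h centrally, $0.02/GB inter-region.
for gb in (100, 1000):
    print(gb, round(centralization_savings(0.015, 0.01, 2, gb, 0.02), 2))
```

With these made-up numbers, centralizing saves money at 100 GB a month but loses it at 1,000 GB, which is why low-volume, latency-tolerant workloads are the natural candidates.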

Advanced Architectural Patterns

Shared Endpoint Consolidation:

The most powerful cost optimization technique involves consolidating multiple services behind shared endpoints. Instead of deploying separate endpoints for each microservice, implement a single endpoint with intelligent internal routing.

This pattern works exceptionally well for API-based services where routing decisions can be made based on request headers, paths, or other application-layer information. The implementation requires more sophisticated load balancing and service discovery, but can reduce endpoint costs by 80% or more.
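Header- or path-based routing behind one endpoint usually lives in a load balancer (for instance, an endpoint service whose Network Load Balancer forwards to an Application Load Balancer with path rules), but the decision itself is longest-prefix matching. This sketch shows that logic with a hypothetical route table, plus the share of endpoint-hour cost removed when N per-service endpoints collapse into one.

```python
from __future__ import annotations

# Hypothetical path-prefix table for services sharing one endpoint.
ROUTES = {
    "/billing/": "billing-svc",
    "/billing/invoices/": "invoice-svc",
    "/users/": "user-svc",
}


def pick_backend(path: str, routes: dict[str, str] = ROUTES) -> str | None:
    """Longest matching prefix wins, so more specific rules override general ones."""
    matches = [prefix for prefix in routes if path.startswith(prefix)]
    return routes[max(matches, key=len)] if matches else None


def consolidation_savings(n_services: int) -> float:
    """Share of endpoint-hour cost removed when n per-service endpoints become one."""
    if n_services < 1:
        raise ValueError("n_services must be at least 1")
    return 1 - 1 / n_services


print(pick_backend("/billing/invoices/42"))  # invoice-svc
print(pick_backend("/orders/7"))             # None
print(f"{consolidation_savings(5):.0%}")      # 80%
```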

Dynamic Endpoint Provisioning:

For applications with predictable traffic patterns, consider dynamic endpoint provisioning. Use CloudWatch metrics and Lambda functions to create endpoints during high-traffic periods and remove them during low-usage windows.

This approach requires careful orchestration to avoid service interruptions, but can reduce costs by 30-50% for applications with clear usage patterns. Implement health checks and graceful failover mechanisms to ensure reliability.
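The core of safe dynamic provisioning is avoiding flapping. This sketch uses two thresholds (hysteresis), so an endpoint is created above one traffic level and removed only below a lower one. The threshold values and the requests-per-minute signal are hypothetical; in practice a scheduled Lambda or CloudWatch alarm would feed it. Keep in mind that a new endpoint is not usable until it reaches the `available` state.

```python
def should_exist(provisioned: bool, requests_per_min: float,
                 create_at: float = 100.0, remove_below: float = 20.0) -> bool:
    """Desired state for an on-demand endpoint, with hysteresis between two thresholds."""
    if remove_below >= create_at:
        raise ValueError("remove_below must be lower than create_at")
    if provisioned:
        # Keep it until traffic falls clearly below the lower threshold.
        return requests_per_min >= remove_below
    # Only create once traffic clearly exceeds the upper threshold.
    return requests_per_min >= create_at


for provisioned, rpm in [(False, 150), (False, 50), (True, 50), (True, 10)]:
    print(provisioned, rpm, "->", should_exist(provisioned, rpm))
```

Traffic of 50 requests per minute leaves the endpoint in whatever state it is already in, which is exactly the band that stops rapid create/delete cycles.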

Service Mesh Integration:

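Advanced teams can integrate Private Link optimization with service mesh architectures. Istio or AWS App Mesh weighted routing can split traffic between paths, with an external controller adjusting the weights based on real-time cost and performance metrics; neither mesh makes cost-aware decisions on its own. AWS has announced end of support for App Mesh on September 30, 2026, so factor that in before adopting it.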

Monitoring and Alerting Framework

Effective cost control requires comprehensive monitoring beyond basic CloudWatch metrics. Implement custom metrics tracking cost per GB, endpoint utilization rates, and cost trends over time.

Key Metrics to Track:

Daily data processing costs per endpoint

Cross-AZ transfer ratios and costs

Endpoint utilization rates (active vs. idle time)

Cost per transaction or API call

Regional cost variations for multi-region deployments
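Several of these metrics are simple ratios once you have daily cost and traffic figures per endpoint. The `DailyStats` fields below are assumed inputs (for example from billing data and CloudWatch), and `hourly_cost` is the endpoint's combined hourly rate across its AZs; this is a sketch of the formulas, not a reporting pipeline.

```python
from dataclasses import dataclass


@dataclass
class DailyStats:
    processing_cost: float  # $ of data-processing charges for the day
    cross_az_cost: float    # $ of cross-AZ transfer attributed to the endpoint
    gb_processed: float
    requests: int
    active_hours: float     # hours (out of 24) with non-zero traffic


def daily_kpis(s: DailyStats, hourly_cost: float) -> dict:
    """Cost per GB, cross-AZ share, utilization and cost per request for one day."""
    total = hourly_cost * 24 + s.processing_cost + s.cross_az_cost
    return {
        "total_cost": round(total, 4),
        "cost_per_gb": total / s.gb_processed if s.gb_processed else None,
        "cross_az_ratio": s.cross_az_cost / total if total else 0.0,
        "utilization": s.active_hours / 24,
        "cost_per_request": total / s.requests if s.requests else None,
    }


# Example: 3-AZ endpoint ($0.03/hour combined), 200 GB and 100k requests in a day.
print(daily_kpis(DailyStats(2.0, 1.0, 200, 100_000, 12), hourly_cost=0.03))
```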

Alerting Strategies:

Set up proactive alerts for cost anomalies. Alert when daily processing costs exceed baseline by 50% or when new endpoints remain idle for more than 48 hours. These early warning systems prevent small issues from becoming major cost overruns.
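The two alert rules above can be expressed as a plain function that a scheduled job evaluates per endpoint. This is a minimal sketch with hypothetical inputs; if you prefer a managed option, AWS Cost Anomaly Detection covers spend spikes without custom code.

```python
def cost_alerts(endpoint_id: str, today_cost: float, baseline_cost: float,
                hours_since_created: float, bytes_since_created: int,
                spike_factor: float = 1.5, idle_grace_hours: float = 48) -> list:
    """Evaluate the two alert rules for one endpoint; returns human-readable alerts."""
    alerts = []
    # Rule 1: daily processing cost more than 50% above baseline (factor 1.5).
    if baseline_cost > 0 and today_cost > baseline_cost * spike_factor:
        alerts.append(f"{endpoint_id}: processing cost ${today_cost:.2f} is over "
                      f"{spike_factor - 1:.0%} above baseline ${baseline_cost:.2f}")
    # Rule 2: a new endpoint that has carried no traffic after the grace period.
    if bytes_since_created == 0 and hours_since_created > idle_grace_hours:
        alerts.append(f"{endpoint_id}: idle for {hours_since_created:.0f}h since creation")
    return alerts


print(cost_alerts("vpce-0aaa", today_cost=16.0, baseline_cost=10.0,
                  hours_since_created=72, bytes_since_created=0))
```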

Cost Attribution:

Implement detailed cost allocation using tags (activated as cost allocation tags in the Billing console so they appear in billing data) and custom billing reports. Break down costs by team, project, environment, and business unit. This granular visibility enables targeted optimization efforts and accountability.
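Once tags flow into your billing data, attribution is a group-by. This sketch assumes line items have already been reduced to dicts with a `cost` and a `tags` mapping (a simplified, hypothetical shape; real Cost and Usage Report exports name tag columns differently). It sums cost per value of one tag and buckets untagged spend separately so it stays visible.

```python
from __future__ import annotations

from collections import defaultdict


def cost_by_tag(line_items, tag_key: str) -> dict[str, float]:
    """Sum cost per value of one tag; items missing the tag land in 'untagged'."""
    totals = defaultdict(float)
    for item in line_items:
        value = item["tags"].get(tag_key, "untagged")
        totals[value] += item["cost"]
    return {k: round(v, 2) for k, v in sorted(totals.items())}


items = [
    {"cost": 7.3, "tags": {"Team": "payments", "Environment": "prod"}},
    {"cost": 2.7, "tags": {"Team": "payments", "Environment": "dev"}},
    {"cost": 5.0, "tags": {}},
]
print(cost_by_tag(items, "Team"))  # {'payments': 10.0, 'untagged': 5.0}
```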

Compliance and Security Considerations

Cost optimization efforts must balance financial efficiency against security and compliance requirements. Not every optimization technique applies to regulated environments or to applications that handle sensitive data.

Compliance-First Optimization:

For regulated workloads, focus on optimization strategies that maintain security posture:

Endpoint scheduling for non-production environments

Data compression and batching

Strategic AZ placement

Monitoring and alerting improvements

Avoid consolidating services behind shared endpoints or implementing dynamic provisioning for compliance-critical applications unless your security team explicitly approves these patterns.

Security Impact Assessment:

Evaluate the security implications of each optimization strategy. Shared endpoints increase the blast radius of security incidents but may be acceptable for internal services with similar security requirements. Document these trade-offs and obtain approval from the security team for significant architectural changes.

Implementation Roadmap

Week 1: Discovery and Baseline

Implement comprehensive endpoint tagging

Establish current cost baselines and traffic patterns

Identify idle and underutilized endpoints

Set up enhanced monitoring and alerting

Week 2-3: Quick Wins

Remove or schedule idle endpoints

Implement cross-AZ optimization for high-traffic endpoints

Deploy data compression for applicable services

Establish cost allocation and reporting

Week 4-6: Advanced Optimization

Evaluate shared endpoint consolidation opportunities

Implement selective routing for mixed traffic patterns

Deploy dynamic provisioning for predictable workloads

Integrate with existing service mesh or load balancing infrastructure

Ongoing: Continuous Optimization

Monthly cost reviews and trend analysis

Quarterly architecture reviews for new optimization opportunities

Regular validation of optimization effectiveness

Team training on cost-conscious Private Link usage patterns

Final Thoughts

Private Link pricing optimization requires a systematic approach combining cost awareness, architectural discipline, and continuous monitoring.

The techniques outlined in this newsletter can reduce Private Link costs by 40-70% while maintaining security and reliability requirements.

The key insight: Private Link costs are highly dependent on architecture.

Small deployment decisions compound into significant cost differences over time. Teams that embed cost considerations into their Private Link design process consistently achieve better financial outcomes than those attempting post-deployment optimization.

Start with the quick wins (idle endpoint removal and cross-AZ optimization), then gradually implement more sophisticated patterns as your team develops expertise with Private Link cost dynamics.

Your cloud bill will thank you.

What's your biggest Private Link cost challenge? Reply and share your optimization wins. I read every response and often feature the best insights in future newsletters.

SPONSOR US

The Cloud Playbook is now offering sponsorship slots in each issue. If you want to feature your product or service in my newsletter, explore my sponsor page.

That’s it for today!

Did you enjoy this newsletter issue?

Share with your friends, colleagues, and your favorite social media platform.

Until next week — Amrut

Get in touch

You can find me on LinkedIn or X.

If you would like to request a topic to read, please feel free to contact me directly via LinkedIn or X.